A major bank's Data Science and Artificial Intelligence team faced the challenge of turning vast but messy payments data into a valuable asset.

The back-office payments team had amassed between 50 million and more than 75 million records, often stored in CSV files. The data was messy and unstructured, riddled with spelling errors, inconsistent company name formats, partial addresses, inconsistent URLs, and more.

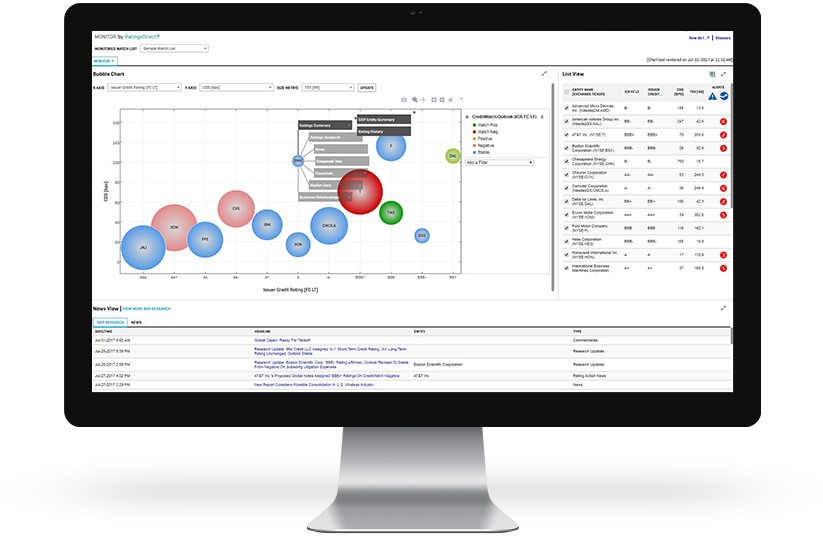

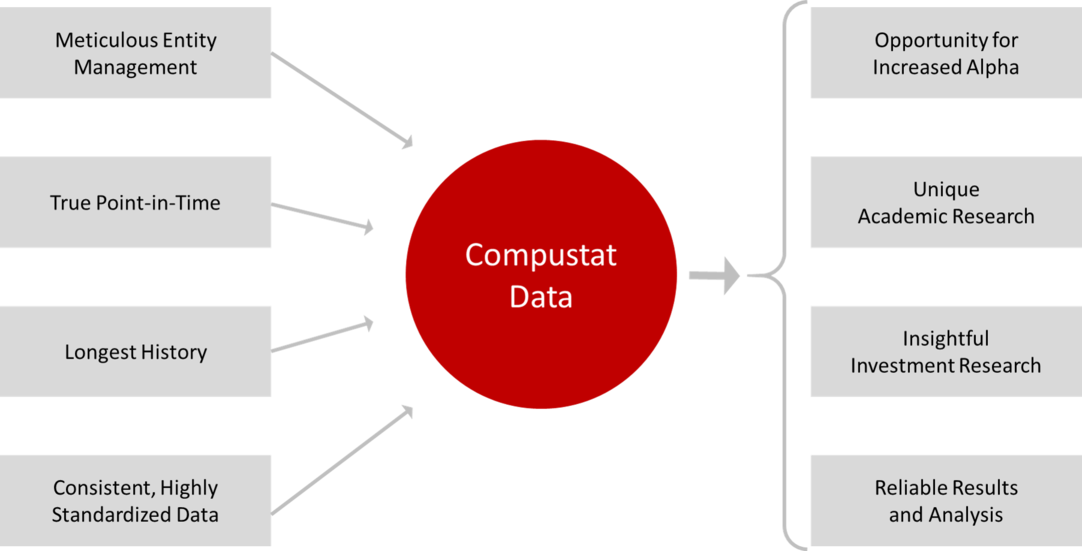

The challenge was twofold: first, to cleanse, standardize, and connect this data to support cross-selling opportunities, and second, to prove its worth. The team decided to use Kensho Link, a machine learning-based solution, to systematically cleanse and standardize the data using a common S&P Capital IQ entity-level identifier. This created a single master hierarchy file linking the disparate data feeds.
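To make the idea of linking messy names to a common entity identifier concrete, here is a minimal sketch in Python. It is not Kensho Link's method or API: it swaps in simple rule-based normalization plus string similarity from the standard library's `difflib`, and the reference table, the `ID-...` identifiers, the suffix list, and the `0.85` threshold are all hypothetical values chosen for illustration.

```python
import re
from difflib import SequenceMatcher

# Hypothetical reference data: entity identifier -> canonical company name.
# Real identifiers and names would come from the reference data provider.
REFERENCE = {
    "ID-001": "Acme Holdings",
    "ID-002": "Globex Corporation",
}

# Illustrative (incomplete) list of legal suffixes to ignore when comparing.
LEGAL_SUFFIXES = {
    "inc", "incorporated", "corp", "corporation",
    "ltd", "limited", "llc", "plc", "co", "company",
}


def normalize(name: str) -> str:
    """Lowercase, strip punctuation, and drop common legal suffixes."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", name.lower())
    tokens = [t for t in cleaned.split() if t not in LEGAL_SUFFIXES]
    return " ".join(tokens)


def link(raw_name: str, reference=REFERENCE, threshold: float = 0.85):
    """Return (entity_id, score) for the best match, or (None, score)
    when no reference name is similar enough to the raw name."""
    target = normalize(raw_name)
    best_id, best_score = None, 0.0
    for entity_id, canonical in reference.items():
        score = SequenceMatcher(None, target, normalize(canonical)).ratio()
        if score > best_score:
            best_id, best_score = entity_id, score
    if best_score >= threshold:
        return best_id, best_score
    return None, best_score


if __name__ == "__main__":
    for raw in ["ACME Holdings, Inc.", "Globx Corp", "Initech LLC"]:
        print(raw, "->", link(raw))
```

Records that map to the same identifier can then be grouped into one profile, which is the role the master hierarchy file plays in the bank's setup. At the volumes described here, comparing every record against every reference name would be too slow, so a production approach would need candidate blocking or a learned model rather than this pairwise loop.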

The testing phase was a success, with Kensho Link's logic producing reliable results. The bank adopted the solution and benefited from automated data consolidation and validation. It could process thousands of records efficiently and scale to meet anticipated data growth. The standardized profiles supported the bank's cross-sell initiatives, and the data feeds were consolidated to provide high-quality reference data across asset classes.